Resolve the ChatGPT Blank Screen Issue with These 5 Methods

A blank ChatGPT screen can be distracting and frustrating. In this article, we look at this common issue and the fixes you can try.

ChatGPT is a powerful text-based AI model that can impress you with the quality of its answers. However, many users report that the screen suddenly goes blank in the middle of a conversation. Naturally, this is not a pleasant experience, and users want to know why it happens and how to fix it. That is exactly what this article covers, so let’s get started.

The Impact of the ChatGPT Blank Screen on User Experience

ChatGPT has been in the limelight since its launch, as it was widely seen as the first tool of its kind. Because so many people wanted to try it, it attracted far more users than OpenAI may have anticipated. This surge in demand is one possible reason for the technical issues and errors that appear now and then. Since these issues disrupt and inconvenience users, fixing them should be a top priority.

Reasons for the ChatGPT Blank Screen

The ChatGPT blank screen is one such issue, and it can cause frustration and inconvenience. Its exact cause is unknown, but users have suggested a few possible reasons. According to some, the blank screen can appear when the servers are overloaded or experiencing technical difficulties. Others suggest it may appear when the AI is asked a question it does not want to answer. This seems unlikely, but some users report seeing a blank screen several times right after asking such questions.

5 Ways to Fix the ChatGPT Blank Screen

You can try these methods to fix the ChatGPT blank screen.

1. Check Your Internet Connection

The first thing to check is your internet connection. Make sure your device has a stable connection.

2. Reload the Page

Try reloading the page or reopening the website. This can fix a temporary blank screen.

3. Clear Your Browser Cache

Your browser stores cached data as you browse the web, and outdated or corrupted cache files can sometimes cause ChatGPT to show a blank screen. Clearing the browser cache may fix the problem.

In Chrome, for example, open Settings, go to “Privacy and security”, and select “Clear browsing data”. Keep “Cached images and files” checked, uncheck all the other options, and click “Clear data”. This often resolves the issue.

4. Change Browser

You can also try switching browsers. Opening ChatGPT in a different browser may fix the issue.

5. Check the Extensions

Browser extensions can sometimes interfere with web applications like ChatGPT. Try disabling any extensions you added recently, then reload the page.

If Nothing Works: Contact Support

If none of the above methods work, you can contact OpenAI’s support team and describe the issue you are facing. They should be able to help you resolve it.

Conclusion

ChatGPT has been in the limelight since its release. Widely seen as the first tool of its kind, it gained an immense number of users in no time. This heavy demand can sometimes cause technical problems. One issue users face while using the AI model is the ChatGPT blank screen, which can be distracting and frustrating. Fortunately, there are several fixes you can try to get back on track.

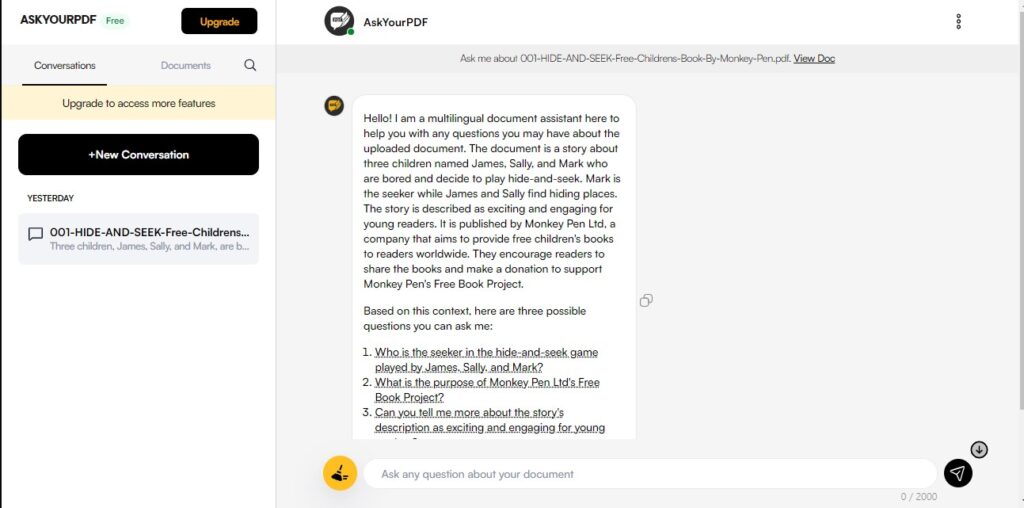
