Here are 5 Amazing Stable Diffusion Men Models You Should Try

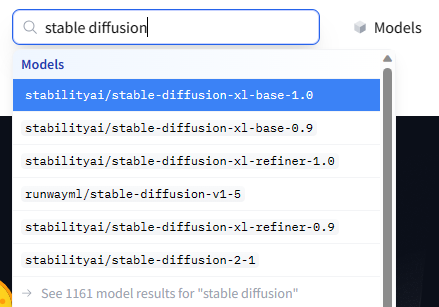

Stable Diffusion is a popular text-to-image AI model that turns your written prompts into actual images. One thing that sets it apart from its competitors is the library of 1000+ custom models the community has built for it, which you can use to boost your creativity. In this blog, we will look at five of the best Stable Diffusion men models, which are ideal if you want to generate male characters, along with what each model does and the settings that suit it. So let’s get started.

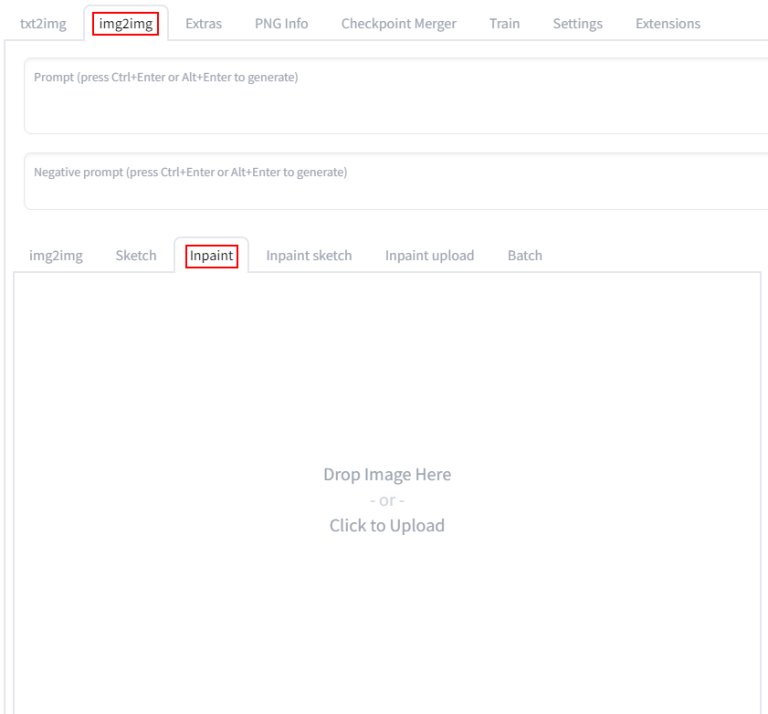

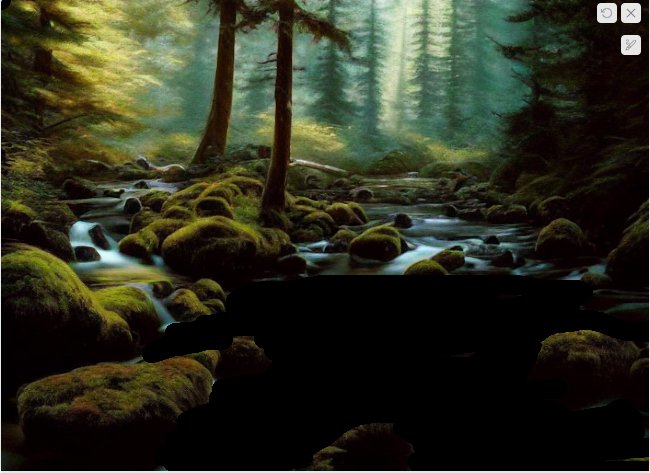

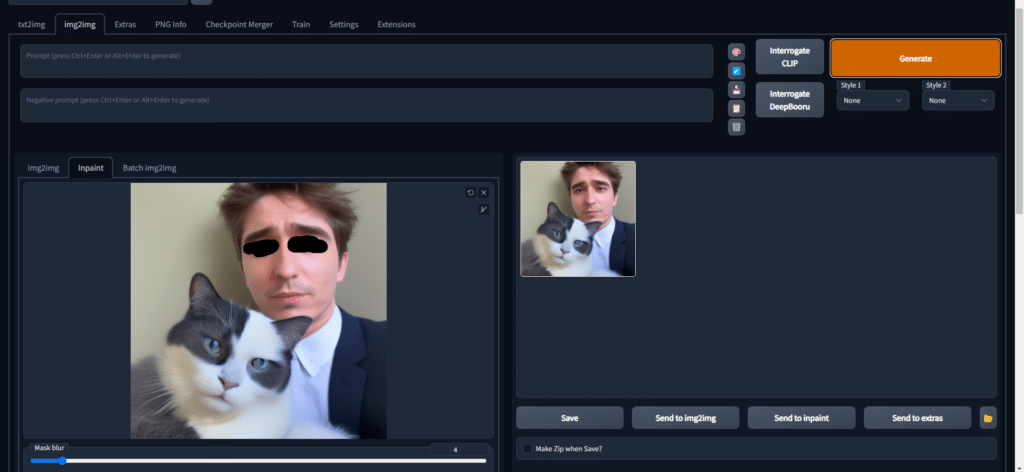

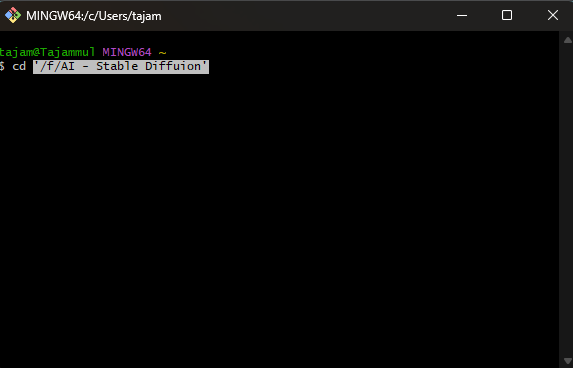

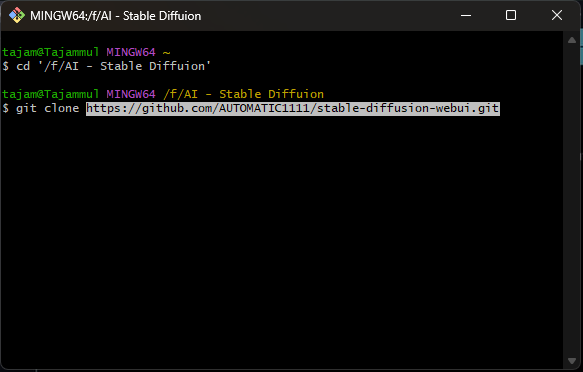

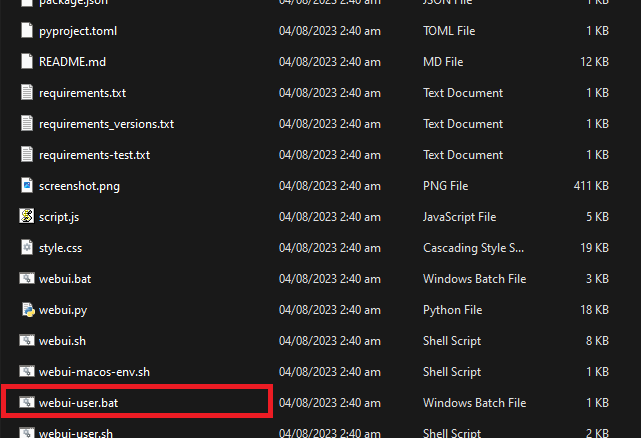

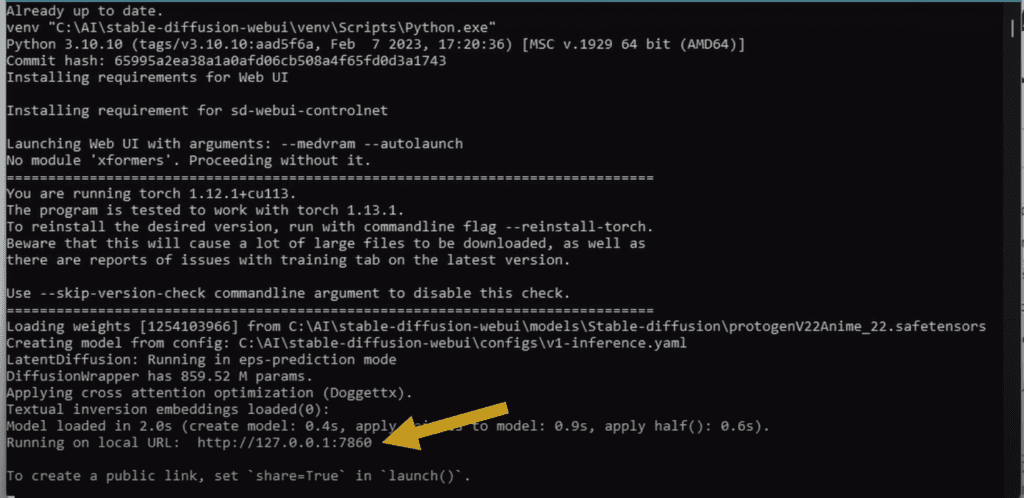

Set Up Stable Diffusion WebUI

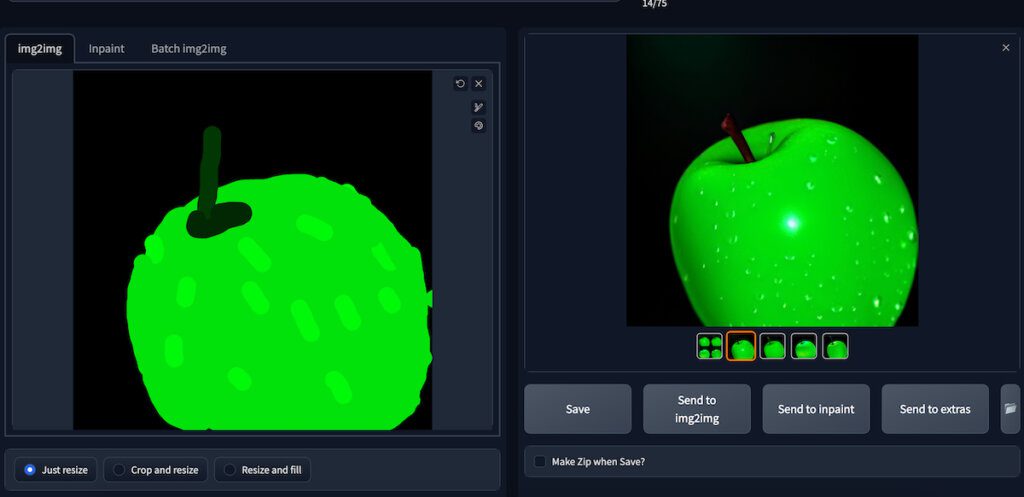

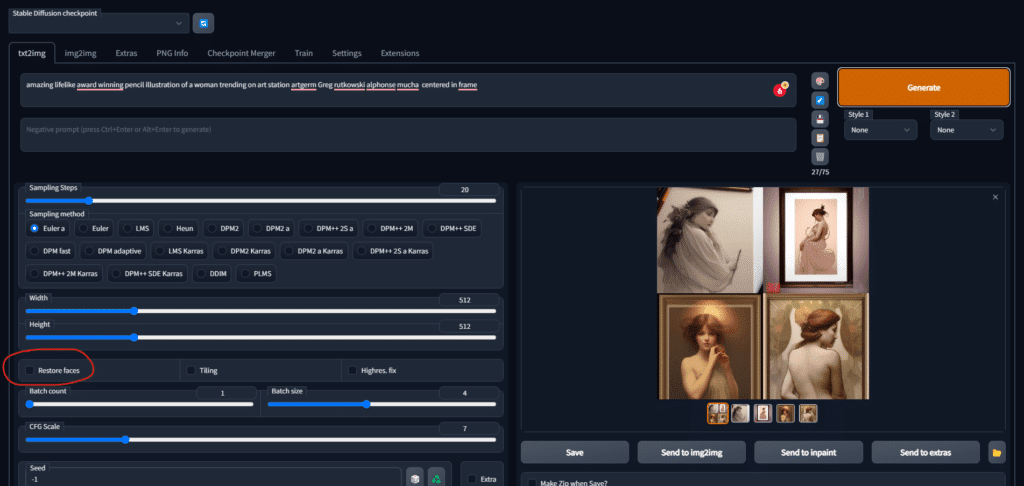

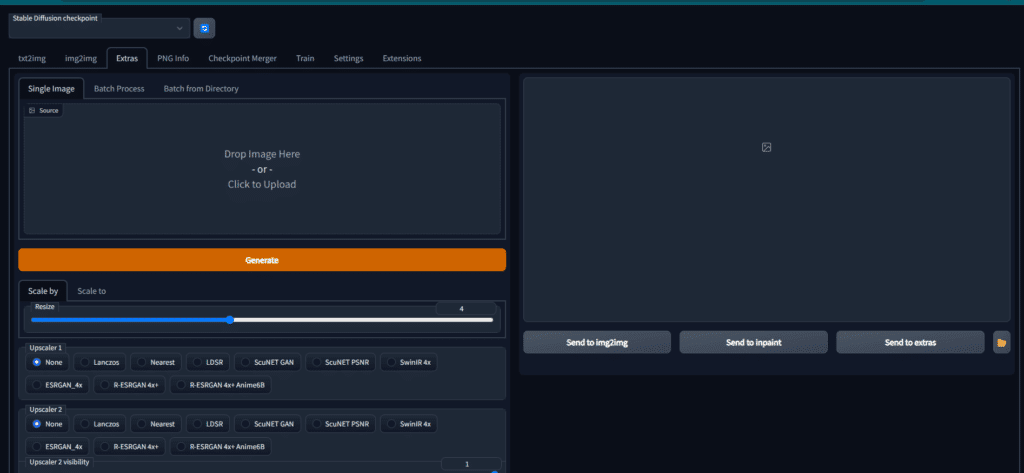

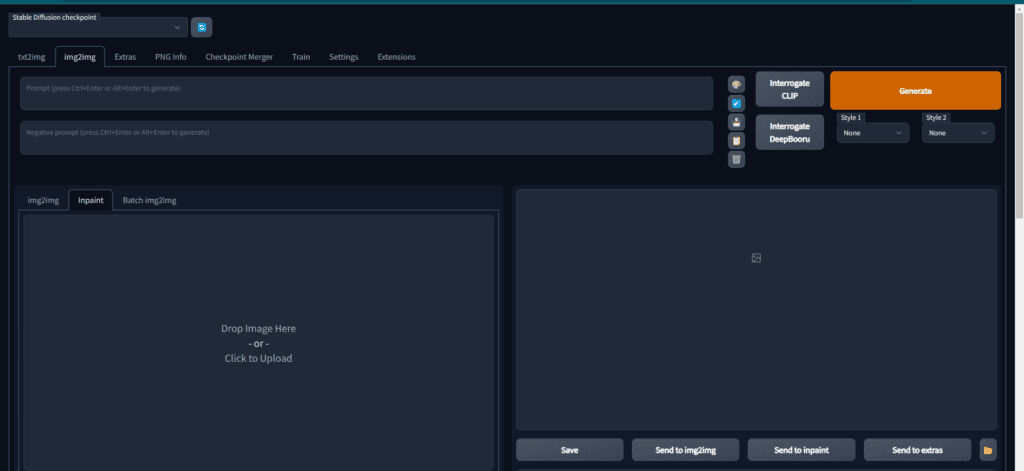

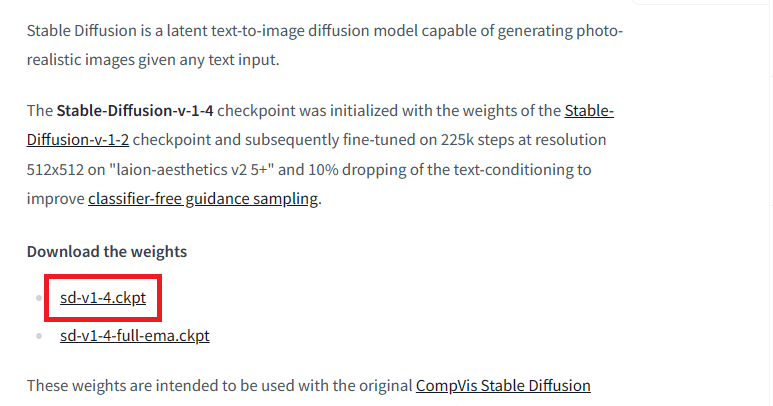

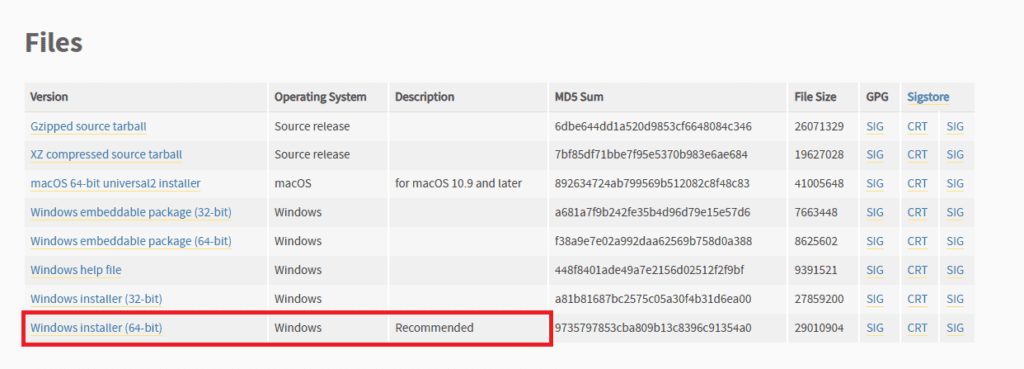

The first thing you need to try these Stable Diffusion men models is Stable Diffusion WebUI (AUTOMATIC1111's web interface for Stable Diffusion). By following a few simple steps, you can install it on your own machine. Once it is running on your PC, you can load any of the models below and start creating.
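
Once the WebUI is installed, it can also be driven from a script instead of the browser. The sketch below assumes the WebUI was launched with the --api flag and is listening on its default local address, http://127.0.0.1:7860, where it exposes a /sdapi/v1/txt2img endpoint. The helper names build_txt2img_request and send, and the sample prompt, are illustrative only; the request is built without being sent so you can inspect it first.

```python
import base64
import json
import urllib.request

# Default local address of the WebUI (assumes it was started with --api).
DEFAULT_URL = "http://127.0.0.1:7860"


def build_txt2img_request(payload: dict, base_url: str = DEFAULT_URL) -> urllib.request.Request:
    """Wrap a txt2img payload in a JSON POST request without sending it."""
    return urllib.request.Request(
        url=f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, out_path: str = "output.png") -> None:
    """Send the request and save the first returned image (a base64-encoded PNG)."""
    with urllib.request.urlopen(request) as response:
        result = json.load(response)
    with open(out_path, "wb") as f:
        f.write(base64.b64decode(result["images"][0]))


if __name__ == "__main__":
    req = build_txt2img_request({"prompt": "portrait of a young man", "steps": 20})
    print(req.get_method(), req.full_url)
    # send(req)  # uncomment once the WebUI is running with --api
```

Keeping request construction separate from sending makes it easy to check a payload before spending GPU time on it.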

Stable Diffusion Men Models

Here is our list of the 5 best Stable Diffusion men models:

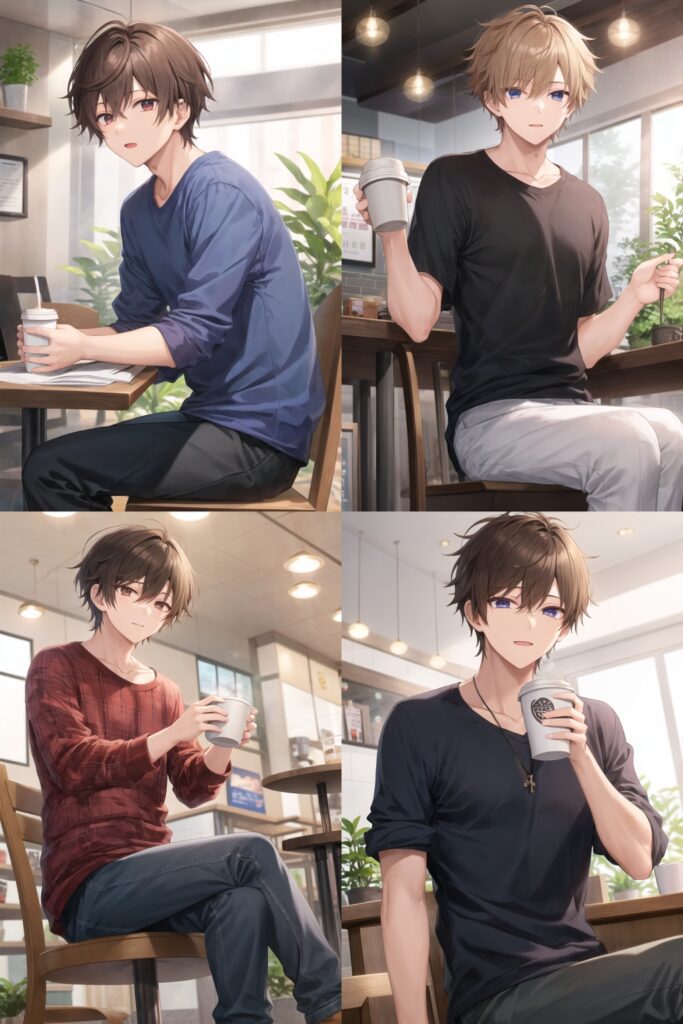

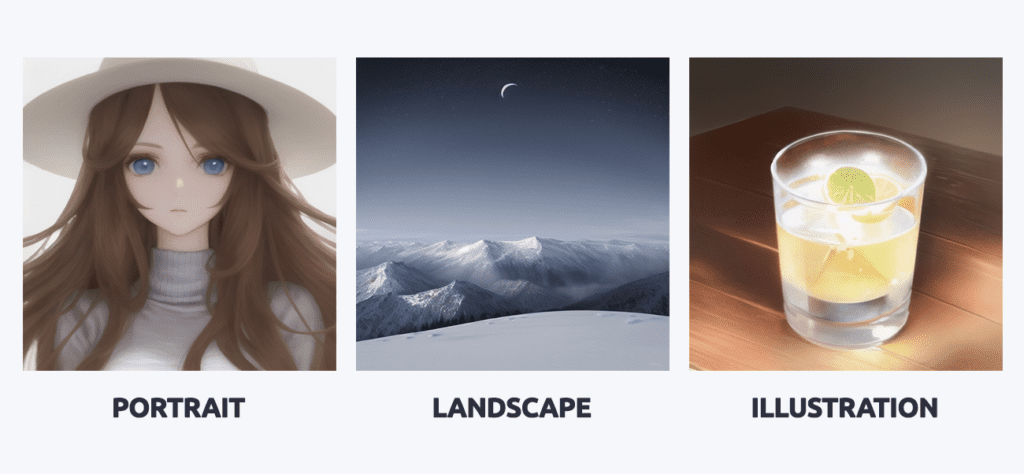

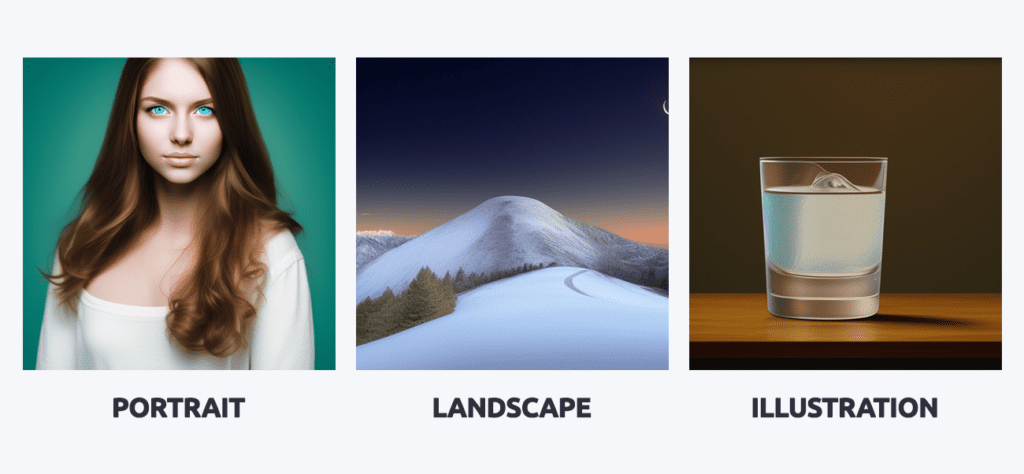

BlueBoys_2D

As the name suggests, this model creates 2D anime-style male characters from your prompts. It focuses on simple, clean, flat 2D designs with vibrant colors. If you are a 2D anime fan, this model is a real treat.

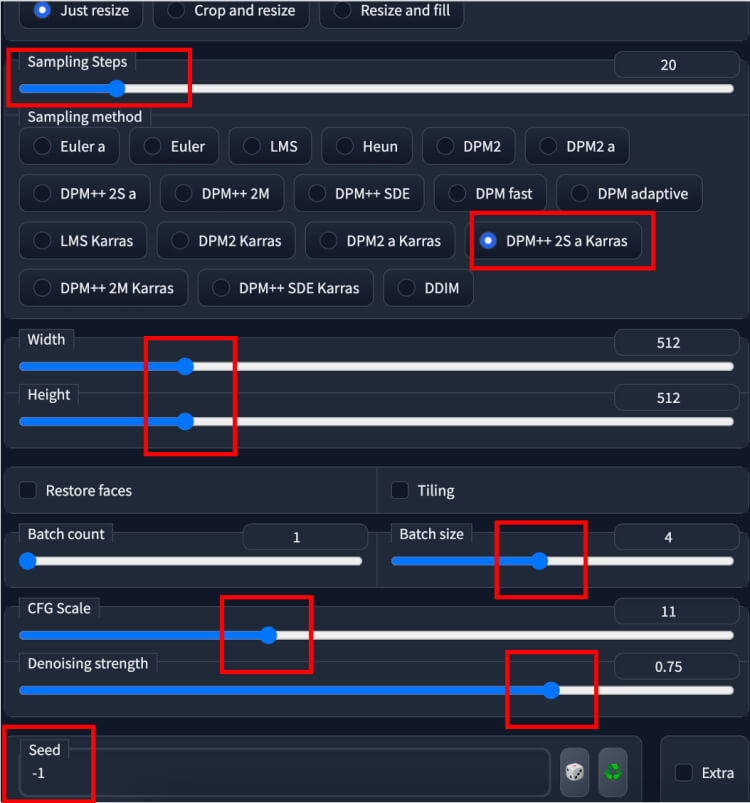

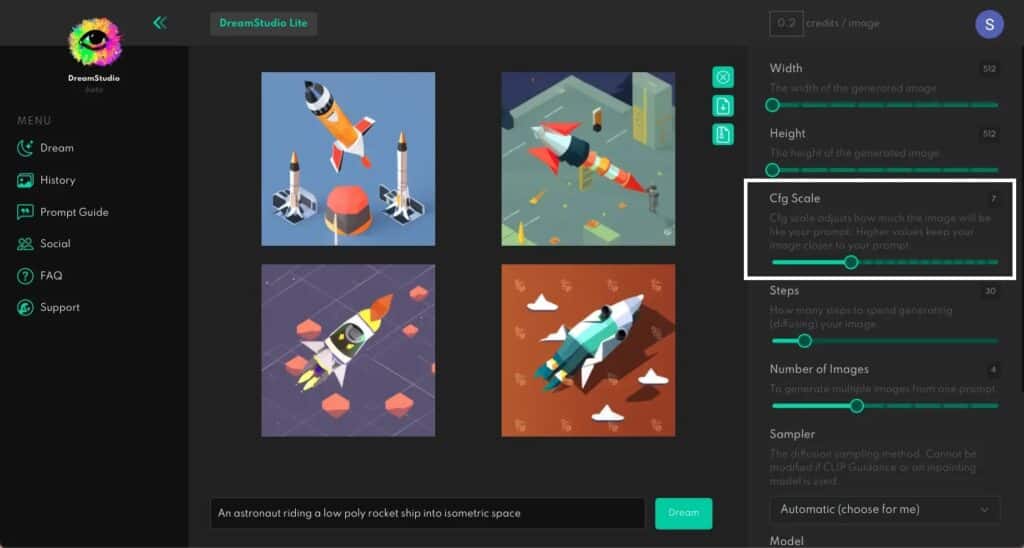

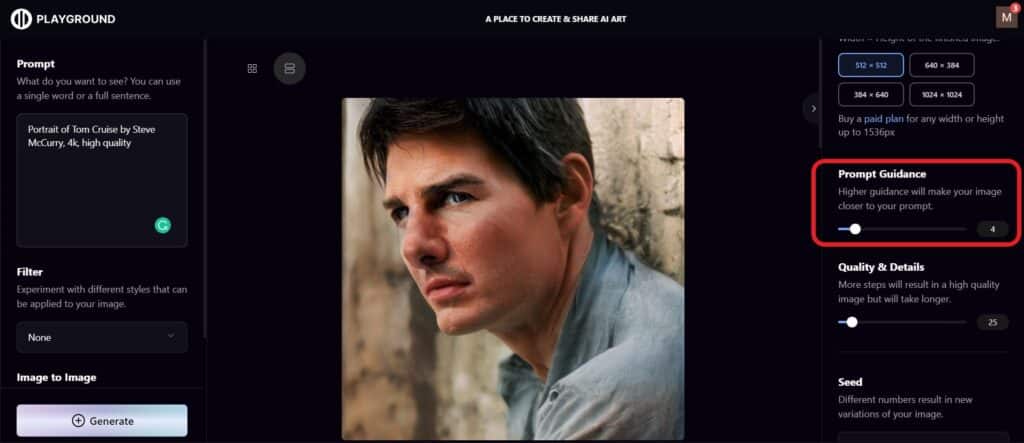

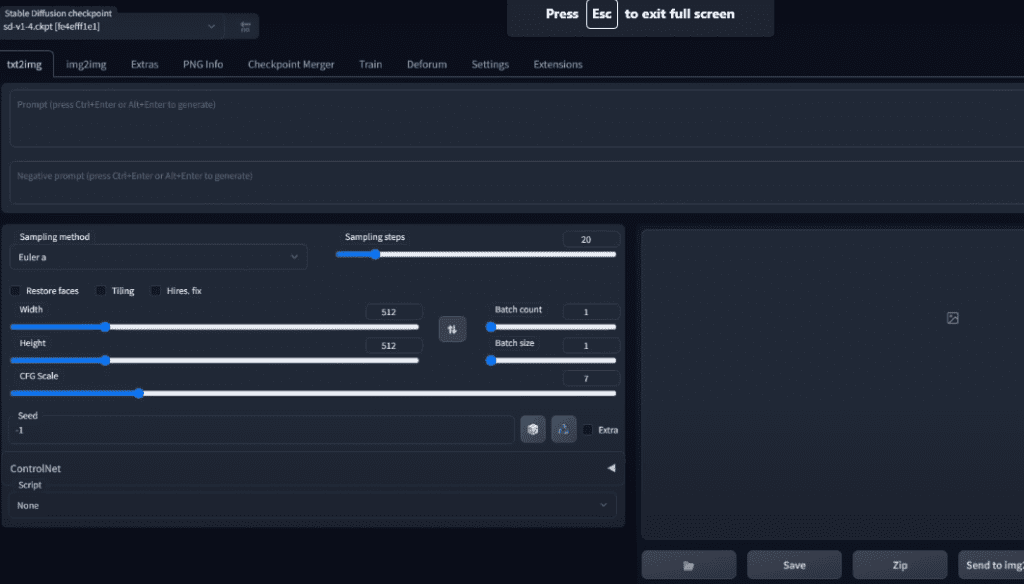

Here are a few recommended settings to get the best out of BlueBoys_2D: a sampling method of Euler a or DPM++ SDE Karras, a clip skip of 2, and R-ESRGAN 4x+ Anime6B as the Hires. fix upscaler. A CFG scale of 7 to 11 and the vae-ft-mse-840000-ema-pruned or kl-f8-anime2 VAE work best in most cases.
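
These recommendations can be captured once and reused for every generation. The sketch below maps them onto field names from AUTOMATIC1111's txt2img API (sampler_name, cfg_scale, enable_hr, hr_upscaler) and its override_settings keys CLIP_stop_at_last_layers (clip skip) and sd_vae. Those names are assumptions about your WebUI version: the VAE file extension and exact upscaler spelling depend on your install, and newer versions may list the Karras scheduler separately from the sampler. The BlueBoysSettings class and the sample prompt are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BlueBoysSettings:
    """Recommended BlueBoys_2D settings (field values assume WebUI naming)."""
    sampler: str = "Euler a"                     # alternative: "DPM++ SDE Karras"
    clip_skip: int = 2
    hires_upscaler: str = "R-ESRGAN 4x+ Anime6B"
    cfg_scale: float = 7.0                       # recommended range: 7 to 11
    vae: str = "vae-ft-mse-840000-ema-pruned.safetensors"  # or kl-f8-anime2

    def to_payload(self, prompt: str) -> dict:
        """Turn the settings into a txt2img request body."""
        return {
            "prompt": prompt,
            "sampler_name": self.sampler,
            "cfg_scale": self.cfg_scale,
            "enable_hr": True,                   # turn on Hires. fix
            "hr_upscaler": self.hires_upscaler,
            "override_settings": {
                "CLIP_stop_at_last_layers": self.clip_skip,
                "sd_vae": self.vae,
            },
        }


payload = BlueBoysSettings(cfg_scale=9).to_payload("1boy, anime style, blue hair")
print(payload["sampler_name"], payload["cfg_scale"])
```

Using a dataclass keeps the defaults in one place while still letting you override a single value, such as the CFG scale, per run.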

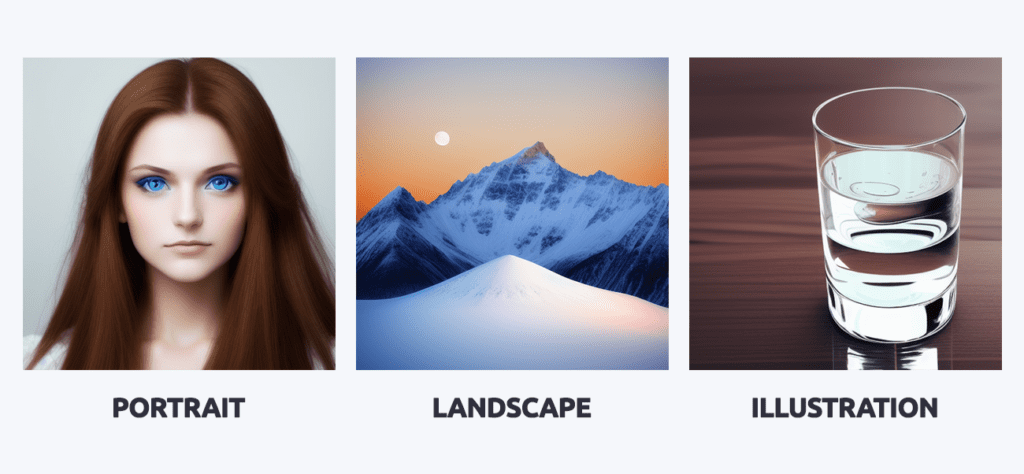

The Three Kingdoms

The Three Kingdoms is one of the best Stable Diffusion men models for anyone who loves fairy-tale, ancient, or king-like characters. Its output resembles classic heroes and villains.

One of its best features is that The Three Kingdoms is updated regularly, so if you are not satisfied with your first results, you can try again with a later version. The model also needs no trigger words, which makes it very user-friendly.

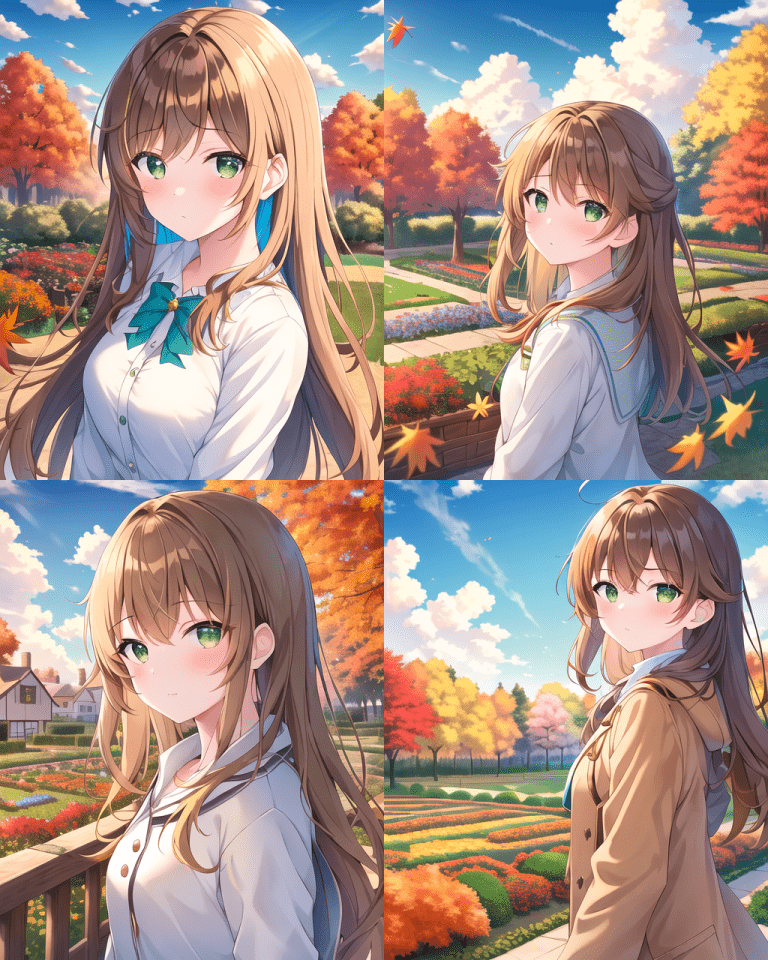

PastelBoys_2D

PastelBoys_2D is another powerful Stable Diffusion model that can amaze you with its results. It is a fantastic tool if you want to generate a handsome anime male character. It improves on its previous version, although it still needs work in a few areas. Overall, its performance is impressive.

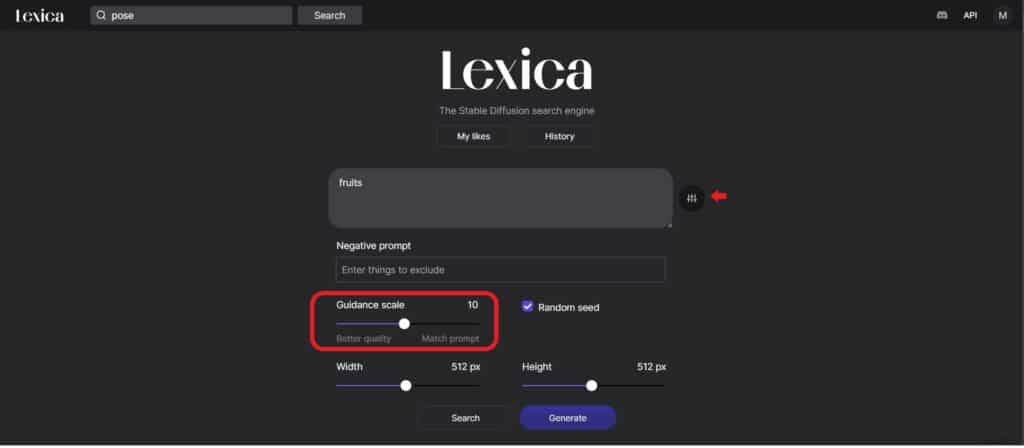

The best settings for generating stunning output with this model are: sampling method Euler a / DPM++ SDE Karras, clip skip 2, Hires. fix upscaler R-ESRGAN 4x+ Anime6B, CFG scale 7~9, and VAE vae-ft-mse-840000-ema-pruned / kl-f8-anime2.
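
Because these settings give a CFG range (7~9) and two samplers, one practical way to pick a favorite is to render the same prompt with a fixed seed across every combination and compare the images side by side. The sketch below builds those payloads with itertools.product; the keys follow AUTOMATIC1111's txt2img API (seed, sampler_name, cfg_scale, enable_hr, hr_upscaler) as an assumption, and the seed value, prompt, and pastelboys_sweep name are arbitrary placeholders.

```python
from __future__ import annotations

from itertools import product

SAMPLERS = ["Euler a", "DPM++ SDE Karras"]
CFG_VALUES = [7, 8, 9]  # the recommended 7~9 range, in whole steps


def pastelboys_sweep(prompt: str, seed: int = 12345) -> list[dict]:
    """Build one txt2img payload per (sampler, CFG) pair, all sharing one seed."""
    payloads = []
    for sampler, cfg in product(SAMPLERS, CFG_VALUES):
        payloads.append({
            "prompt": prompt,
            "seed": seed,  # fixed seed, so differences come from sampler/CFG only
            "sampler_name": sampler,
            "cfg_scale": cfg,
            "enable_hr": True,
            "hr_upscaler": "R-ESRGAN 4x+ Anime6B",
            "override_settings": {"CLIP_stop_at_last_layers": 2},
        })
    return payloads


for p in pastelboys_sweep("handsome anime man, pastel colors"):
    print(p["sampler_name"], p["cfg_scale"])
```

Six renders is usually enough to see whether a lower or higher CFG suits your prompt better.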

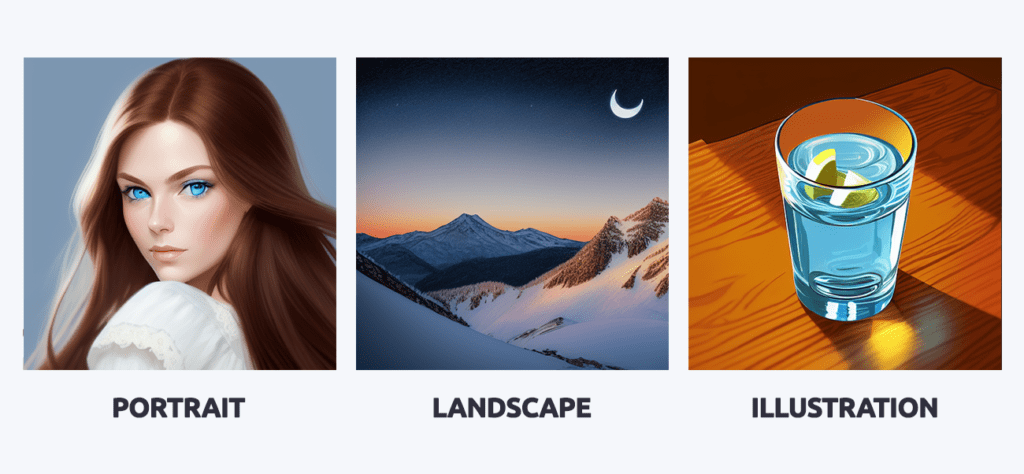

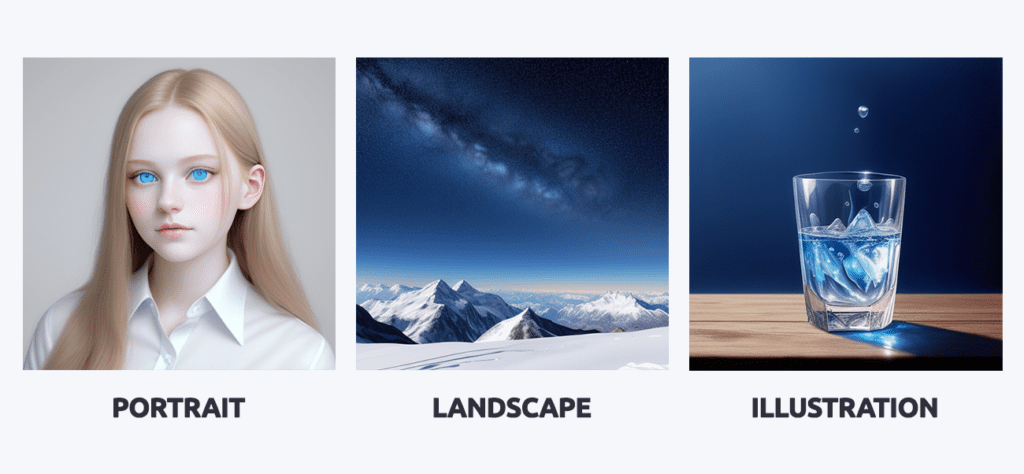

Pretty Boys

Pretty Boys is the first realistic model on this list: a LoRA that helps you create lifelike male characters. It is trained to generate handsome, clean-shaven faces, and you can steer the output with terms like Caucasian, Black, Asian, or Indian.
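
In AUTOMATIC1111's WebUI, a LoRA is activated from the prompt with the <lora:filename:weight> syntax, so steering Pretty Boys comes down to adding one of the listed terms next to that tag. The sketch below assumes a LoRA file named prettyBoys and a weight of 0.7; both are hypothetical placeholders, so use your actual file name and the weight the model page recommends. The pretty_boys_prompt helper is likewise illustrative.

```python
# Terms the model description says can steer Pretty Boys' output.
ETHNICITY_TERMS = ("Caucasian", "Black", "Asian", "Indian")


def pretty_boys_prompt(ethnicity: str, extra: str = "",
                       lora_name: str = "prettyBoys", weight: float = 0.7) -> str:
    """Compose a prompt that names one ethnicity term and loads the LoRA.

    lora_name and weight are hypothetical defaults, not values from the model page.
    """
    if ethnicity not in ETHNICITY_TERMS:
        raise ValueError(f"expected one of {ETHNICITY_TERMS}, got {ethnicity!r}")
    parts = [f"photo of a handsome {ethnicity} man", "clean-shaven"]
    if extra:
        parts.append(extra)
    parts.append(f"<lora:{lora_name}:{weight}>")  # WebUI LoRA activation tag
    return ", ".join(parts)


print(pretty_boys_prompt("Asian", extra="studio lighting"))
```

Rejecting unknown terms is a deliberate choice here: it keeps a typo from silently producing a prompt the model was not trained to respond to.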

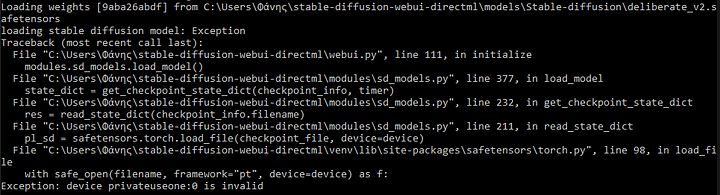

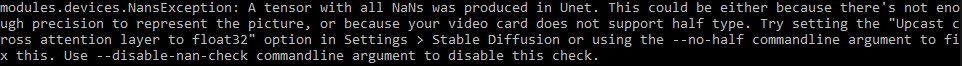

The Pretty Boys model is trained on Stable Diffusion 1.5, and you will get better results with the sd-vae-ft-mse-original VAE. To use LoRAs in AUTOMATIC1111, you will need to update the WebUI by running git pull. Note that the LoRA file must be copied into the stable-diffusion-webui/models/Lora directory, and the LoRA weight should be set as the model's instructions recommend.
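
Copying the file into place can be scripted. The sketch below assumes the WebUI uses its default models/Lora folder under a checkout path you supply, and that the WebUI refers to a LoRA by its file name without the extension. The install_lora and lora_tag names and the prettyBoys file name in the demo are hypothetical. Updating the WebUI with git pull is still a separate step.

```python
from __future__ import annotations

import shutil
import tempfile
from pathlib import Path

# File types the WebUI commonly accepts for LoRA weights.
LORA_SUFFIXES = {".safetensors", ".pt", ".ckpt"}


def install_lora(lora_file: str | Path, webui_root: str | Path) -> Path:
    """Copy a LoRA file into <webui_root>/models/Lora and return the copied path."""
    src = Path(lora_file)
    if src.suffix not in LORA_SUFFIXES:
        raise ValueError(f"unexpected LoRA file type: {src.suffix!r}")
    dest_dir = Path(webui_root) / "models" / "Lora"
    dest_dir.mkdir(parents=True, exist_ok=True)  # create the folder if it is missing
    dest = dest_dir / src.name
    shutil.copy2(src, dest)  # copy contents and file metadata
    return dest


def lora_tag(installed: Path, weight: float = 1.0) -> str:
    """Prompt tag that loads the LoRA, named by its file stem."""
    return f"<lora:{installed.stem}:{weight}>"


if __name__ == "__main__":
    # Dry run in a throwaway directory with a fake LoRA file (hypothetical names).
    with tempfile.TemporaryDirectory() as tmp:
        fake = Path(tmp) / "prettyBoys.safetensors"
        fake.write_bytes(b"not real weights")
        installed = install_lora(fake, Path(tmp) / "stable-diffusion-webui")
        print(installed.relative_to(tmp))
        print(lora_tag(installed, 0.8))
```

The weight passed to lora_tag is where you apply whatever value the model's instructions suggest.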

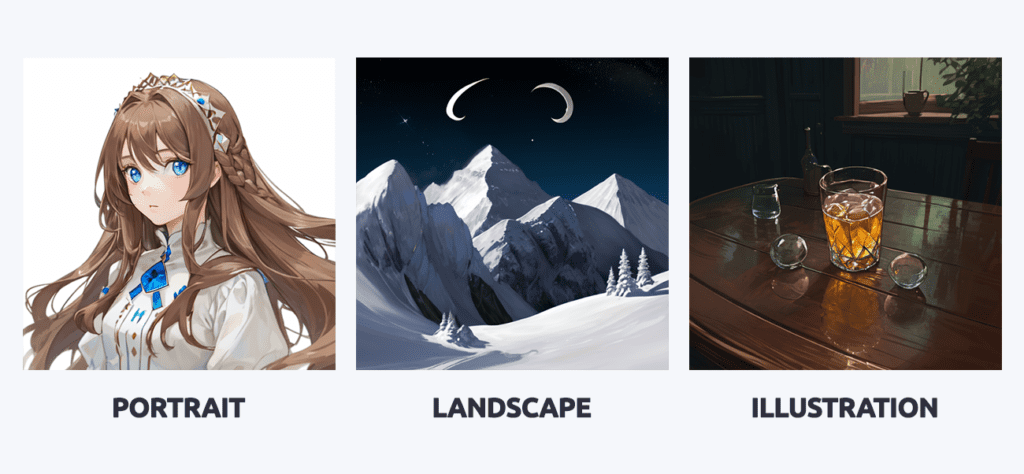

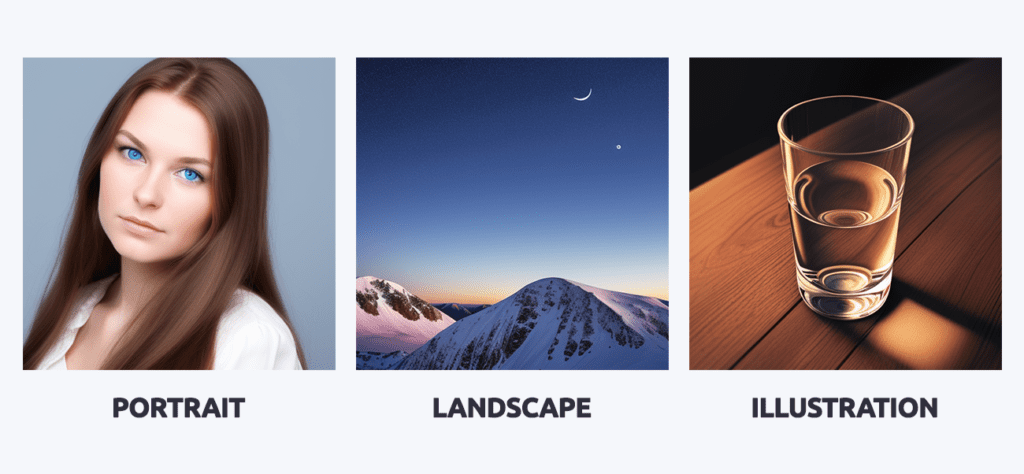

Refdalorange

Refdalorange creates male characters with a good balance between 2D and 3D design. Although the model is trained to generate male characters, it also handles female characters pretty well. It uses the orangemix.pt VAE, which is very effective for producing high-quality character designs.

What sets Refdalorange apart from other Stable Diffusion men models is that it can place male characters in almost any situation: you can create your character as a warrior, a scholar, or anything else you want.
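
To try one character in several roles while keeping the recommended VAE, you can reuse a single base prompt and swap only the role. The sketch below assumes AUTOMATIC1111's txt2img API keys override_settings (with sd_vae) and override_settings_restore_afterwards, and that the VAE appears in your WebUI under the name orangemix.pt; the base prompt and the refdalorange_payloads helper are hypothetical.

```python
# Roles mentioned in the text; extend with any others you want to try.
ROLES = ("warrior", "scholar")

# Hypothetical base prompt; {role} is swapped for each request.
BASE_PROMPT = "1boy, {role}, detailed character design"


def refdalorange_payloads(roles=ROLES, vae: str = "orangemix.pt") -> list:
    """Build one txt2img payload per role, all pinned to the same VAE."""
    return [
        {
            "prompt": BASE_PROMPT.format(role=role),
            "override_settings": {"sd_vae": vae},
            # Restore the WebUI's previous VAE once the request finishes.
            "override_settings_restore_afterwards": True,
        }
        for role in roles
    ]


for payload in refdalorange_payloads():
    print(payload["prompt"])
```

Pinning the VAE per request, rather than changing it globally in the WebUI, keeps other models you switch to afterwards unaffected.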

Conclusion

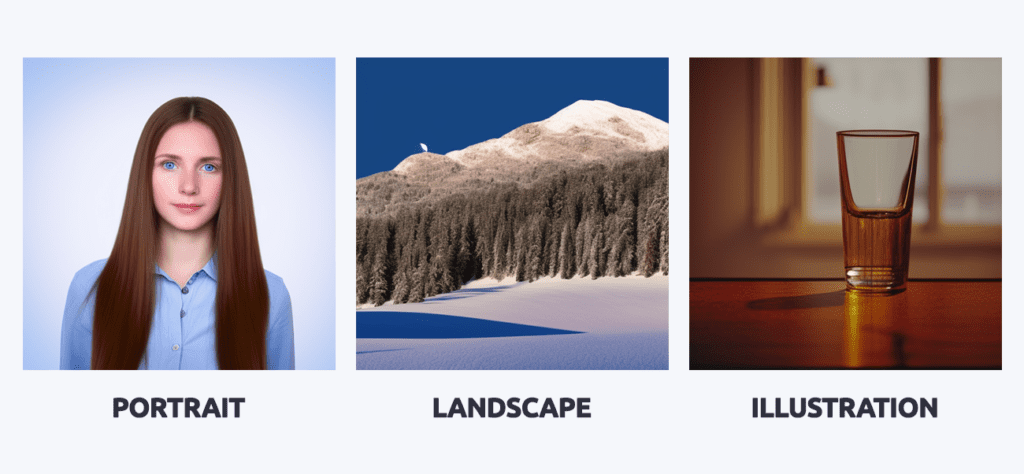

Stable Diffusion can produce an ocean of amazing output, from imaginative scenery to realistic human characters, and there are tons of custom models built for specific purposes. If you are looking to generate male characters, you have dozens of Stable Diffusion men models to choose from, ranging from 2D to 3D to realistic styles. This blog covered five of them, along with their strengths, recommended settings where available, and any weaknesses worth knowing about.